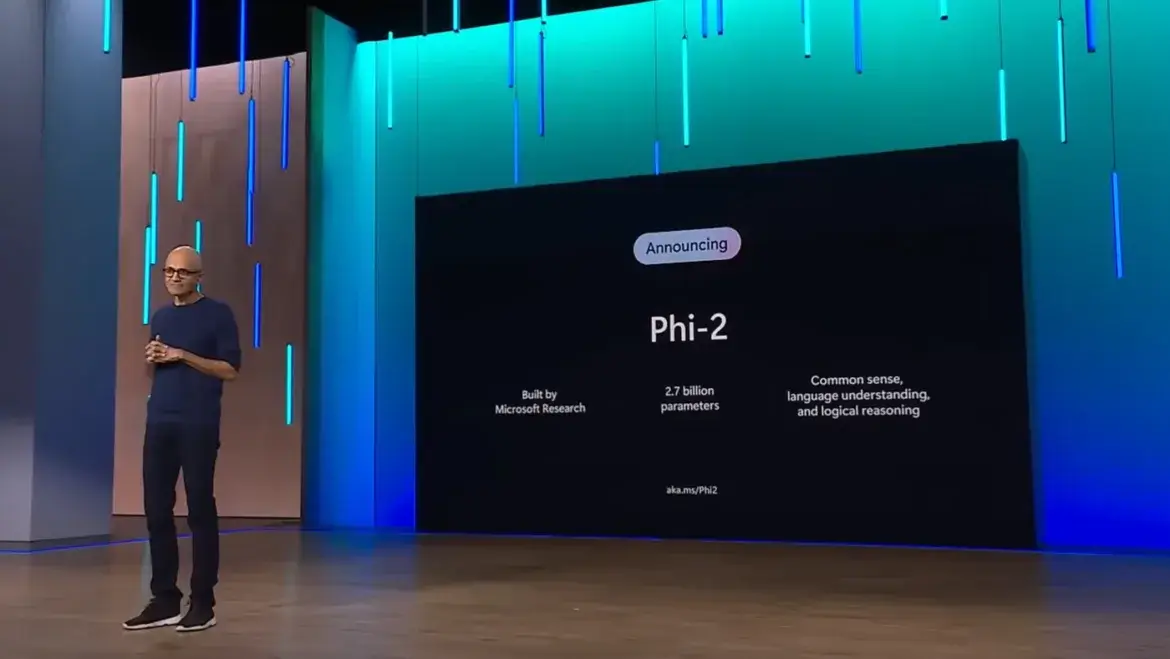

Microsoft has taken another significant step in artificial intelligence with the release of Phi-2, a small language model (SLM) that packs a surprising punch. Although it has only 2.7 billion parameters, it rivals much larger models on Microsoft's reported benchmarks. That makes it a compelling option for research and development today and a candidate for future applications.

“With only 2.7 billion parameters, Phi-2 surpasses the performance of Mistral and Llama-2 models at 7B and 13B parameters on various aggregated benchmarks,” Microsoft said, throwing in a low blow for Google’s newest AI model: “Furthermore, Phi-2 matches or outperforms the recently-announced Google Gemini Nano 2, despite being smaller in size.”

Phi-2’s Key Strengths:

- Exceptional Performance: Despite its small size, Phi-2 shows strong reasoning and language understanding, outperforming larger models such as Mistral 7B and Llama 2 (7B and 13B) on various benchmarks. Microsoft attributes this to carefully curated, “textbook-quality” training data and to scaling techniques that carry knowledge over from its predecessor, Phi-1.5.

- Efficiency: Unlike large language models (LLMs), which need substantial computational resources, Phi-2 can run on modest hardware, including laptops and potentially even smartphones. This makes on-device applications, particularly on mobile, a realistic possibility.

- Accessibility: Microsoft has made Phi-2 available in the Azure AI Studio model catalog, so researchers and developers can easily access and experiment with it. This lowers the barrier to AI research and development.
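To put the laptop claim in perspective, here is a back-of-the-envelope sketch (our own illustration, not from Microsoft) of how much memory Phi-2's 2.7 billion weights occupy at common numeric precisions. It assumes a dense model and counts weights only. Activations, the attention cache, and runtime overhead are ignored. The precision labels and the helper name `weight_memory_gb` are hypothetical.

```python
# Back-of-the-envelope estimate: weight memory = parameters x bytes per parameter.
# Assumption: dense model, weights only (no activations, KV cache, or runtime overhead).

# Bytes used to store one parameter at each (illustrative) precision.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}

# Phi-2's reported parameter count.
PHI2_PARAMS = 2.7e9


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{precision:>10}: {weight_memory_gb(PHI2_PARAMS, nbytes):5.2f} GB")
```

At 16-bit precision the weights come to roughly 5.4 GB, which fits in the memory of many current laptops. If 8-bit or 4-bit quantization were applied, that figure would shrink further, possibly at some cost in accuracy.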

Phi-2 vs. the Competition:

- Matches or Outperforms Google’s Gemini Nano 2: Although Gemini Nano 2 has about 3.25 billion parameters, Microsoft reports that Phi-2 matches or outperforms it on several benchmarks, a sign that size isn’t everything when it comes to AI models.

- Competes with Larger Models: On some complex benchmarks, Phi-2 matches or outperforms models up to 25 times its size (around 70 billion parameters). This points toward AI solutions that are more efficient and easier to deploy.

Beyond Model Development:

Microsoft’s AI strategy extends beyond models. The company has also introduced two custom chips, the Azure Maia AI accelerator and the Arm-based Azure Cobalt CPU, as part of its push to integrate AI more tightly with its cloud infrastructure. By designing hardware and software together, Microsoft places itself in direct competition with Google and Apple in AI hardware.

The Future of Phi-2:

Phi-2 is currently limited to research use, but its potential for future applications is significant. Its small size and efficiency make it a promising candidate for real-world scenarios such as:

- Personal assistants: An AI assistant running locally on a phone could handle complex tasks and provide useful information without depending on the cloud or quickly draining the battery.

- Education and learning: Phi-2’s ability to understand and explain complex concepts could make personalized learning more accessible and engaging.

- Accessibility tools: Phi-2 could be used to build tools that assist people with disabilities, promoting greater inclusivity and independence.

Conclusion:

Microsoft’s Phi-2 is a significant advance for small language models. Its strong benchmark results, efficiency, and availability make it a valuable tool for research and development, with broad potential for future applications. As AI continues to evolve, Phi-2 shows how much smaller, well-trained models can achieve.

You can learn more about Phi-2 here.